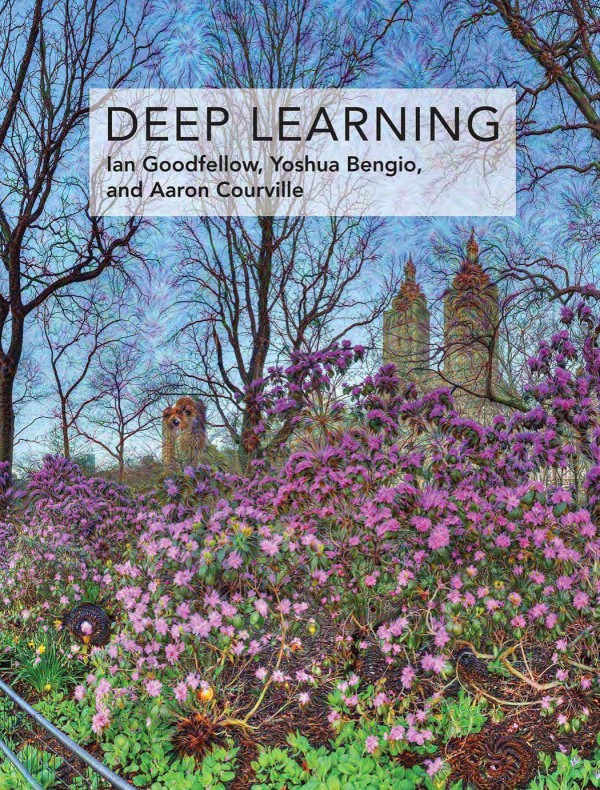

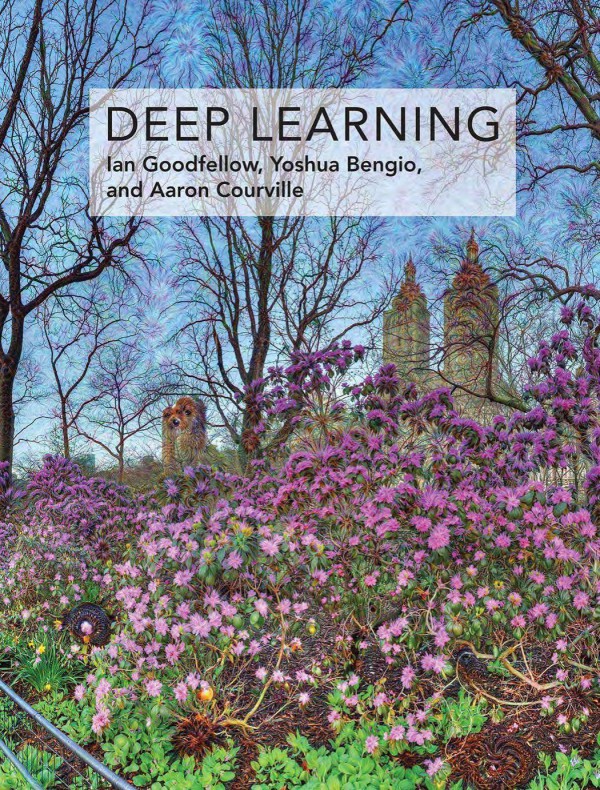

Deep Learning, 1st Edition, by Ian Goodfellow, Yoshua Bengio, Aaron Courville (ISBN-10: 0262035618; ISBN-13: 9780262035613)

Original price: $50.00. Current price: $25.00.

- Authors: Ian Goodfellow; Yoshua Bengio; Aaron Courville
- Series: Artificial Intelligence [24]
- Tags: Computers; Artificial Intelligence; General; Computer Science
- Author sort: Goodfellow, Ian & Bengio, Yoshua & Courville, Aaron
- Ids: Google; 9780262035613
- Languages: eng
- Published: Nov 2016
- Publisher: MIT Press

Comments:

An introduction to a broad range of topics in deep learning, covering mathematical and conceptual background, deep learning techniques used in industry, and research perspectives.

“Written by three experts in the field, Deep Learning is the only comprehensive book on the subject.” (Elon Musk, cochair of OpenAI; cofounder and CEO of Tesla and SpaceX)

Deep learning is a form of machine learning that enables computers to learn from experience and understand the world in terms of a hierarchy of concepts. Because the computer gathers knowledge from experience, there is no need for a human computer operator to formally specify all the knowledge that the computer needs. The hierarchy of concepts allows the computer to learn complicated concepts by building them out of simpler ones; a graph of these hierarchies would be many layers deep.

This book introduces a broad range of topics in deep learning. The text offers mathematical and conceptual background, covering relevant concepts in linear algebra, probability theory and information theory, numerical computation, and machine learning. It describes deep learning techniques used by practitioners in industry, including deep feedforward networks, regularization, optimization algorithms, convolutional networks, sequence modeling, and practical methodology; and it surveys such applications as natural language processing, speech recognition, computer vision, online recommendation systems, bioinformatics, and videogames.
Finally, the book offers research perspectives, covering such theoretical topics as linear factor models, autoencoders, representation learning, structured probabilistic models, Monte Carlo methods, the partition function, approximate inference, and deep generative models. Deep Learning can be used by undergraduate or graduate students planning careers in either industry or research, and by software engineers who want to begin using deep learning in their products or platforms. A website offers supplementary material for both readers and instructors.